Overview

Preliminary description - subject to change

The Mach2 supercomputer is a massively parallel shared-memory SGI UV3000 system operated by the Johannes Kepler University Linz (JKU) on behalf of a consortium consisting of JKU Linz, the University of Innsbruck (UIBK), the Paris Lodron University of Salzburg, the Technische Universität Wien, and the Johann Radon Institute for Computational and Applied Mathematics (RICAM).

Links

- JKU: MACH-2 Official Documentation by JKU Linz.

- JKU Linz IT Code of Operation and Usage

- JKU Application Form: User ID for Compute Server

- Description of Previous Mach Server

Many techniques described for the previous Mach server are still valid; for changes, please see below.

Applying for an Account on Mach2

As a prerequisite, you need a valid University of Innsbruck user ID that is associated with an institute ("Institutsbezogene Benutzerkennung"; a student account "cs..." is not sufficient).

To apply for an account on Mach2, you need to fill in both:

- the standard UIBK HPC application form of the Focal Point Scientific Computing (UIBK approval process),

- and the JKU Application Form (see Instructions), whereby you commit to the JKU Linz IT Code of Operation and Usage.

After filling in your information in both forms, hand them to a member of the UIBK HPC team, and we will take care of the rest. The JKU form is required to have your original signature; a scan or copy will not be accepted by JKU administration.

Acknowledging system usage

University of Innsbruck users are required to acknowledge their use of MACH2 by assigning all resulting publications to the Research Area Scientific Computing in the Forschungsleistungsdokumentation (FLD, https://www.uibk.ac.at/fld/) of the University of Innsbruck, and by adding the following statement to the acknowledgments in each publication:

The computational results presented here have been achieved (in part) using the MACH2 Interuniversity Shared Memory Supercomputer.

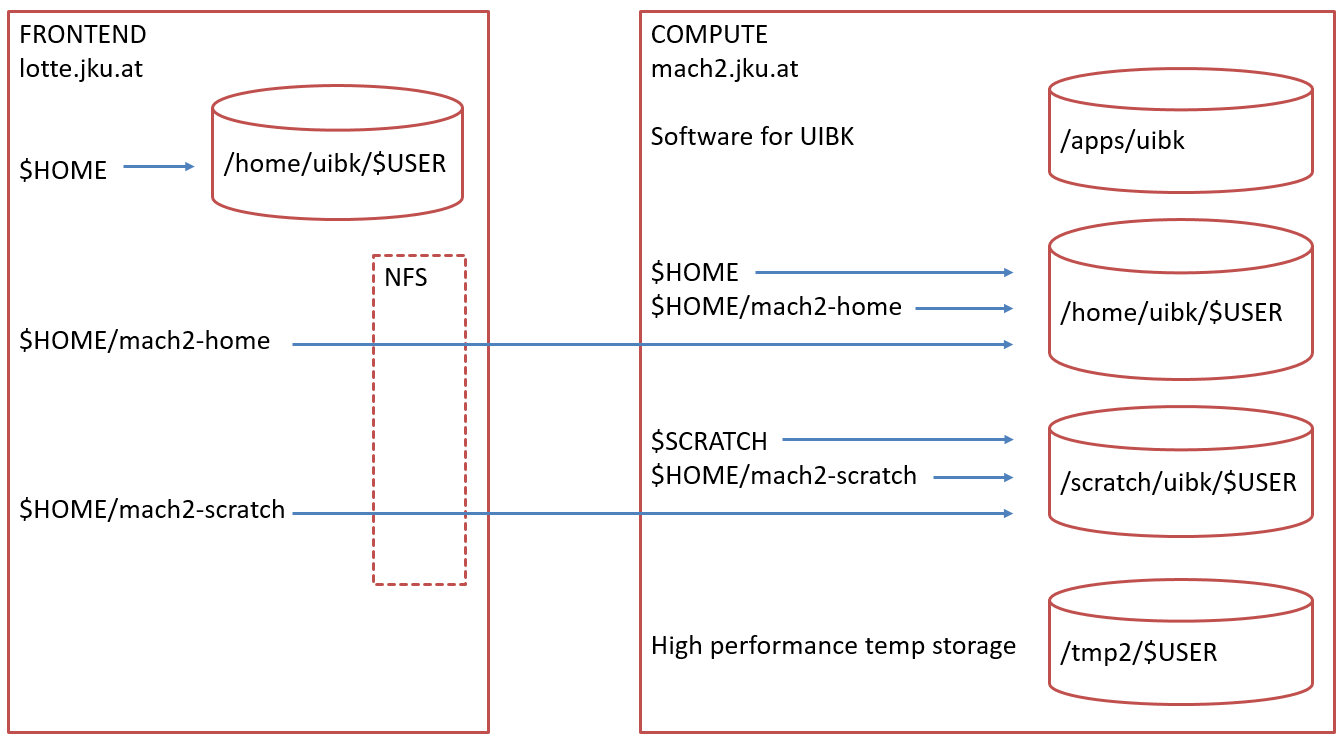

Setup of Hosts and File Systems

The MACH2 supercomputer system is designed and administered by the IT Services of the Johannes Kepler University Linz (JKU). It consists of two hosts:

- mach2.jku.at

  Compute server. 1728 Intel Xeon E5-4650 processor cores (144 sockets with 12 cores each), 20 TB memory. File systems:

  - $HOME (default HOME directory),

  - $SCRATCH (temporary storage on magnetic disks), and

  - /tmp2 (high performance temporary storage on NVME drives).

- lotte.jku.at

  Job management server. Used to submit jobs. 16 legacy Intel Xeon E5540 processor cores, 70 GB memory.

The system is set up such that logins to lotte should normally not be necessary.

Differences between Mach and Mach2

The setup of Mach2 is quite similar to that of Mach, and the description of Mach is still valid to a large degree. An adapted description for Mach2 will be added to this page later; until then, please refer to the following list of differences and to the official documentation of Mach2 by JKU Linz.

- On Mach2, use a password distinct from all other passwords.

- Please check the permissions of your HOME and SCRATCH directories. If they have been set up with group read permissions for group uibk (all UIBK users) and you want to restrict access to your own account, please log on to lotte and issue the command

  chmod 700 $HOME mach2-home mach2-scratch

- Mach2 has 12 cores per socket, as opposed to 8 cores on Mach. As on Mach, PBS allocates resources in whole multiples of virtual nodes (vnodes). Each vnode corresponds to one socket, i.e. 12 cores. Memory is heterogeneous: 127 vnodes have 122 GB each, 16 vnodes are equipped with 244 GB. Large memory jobs will preferentially be placed on the vnodes with more memory, if available. Otherwise, they will be placed on any available vnodes. Please take this into account when specifying resource allocations for your jobs.

- Due to the inhomogeneous memory layout, the resource allocation will work well only if you specify the number of CPUs and the amount of memory. So please use the full

  -l select=N:ncpus=NCPU:mem=size[m|g]

  option with realistic CPU and memory requirements for all your jobs.

- Lotte has a CPU architecture different from Mach2, and software libraries are available only on Mach2. To build software, etc., you need to log on to Mach2 with ssh or qsub -I.

- Similar to Mach, on Mach2 please do not perform CPU- or memory-intensive work in SSH sessions. Use the batch system instead (qsub). For interactive work, start an interactive job (qsub -I). Note that every interactive job will consume a multiple of 12 CPUs during its entire lifetime, and in periods of high system usage may only start after a (possibly substantial) delay. So please do not forget to terminate your session after you are finished.

- Currently, job submissions and deletions are possible only on Lotte. As a workaround, on Mach2, we have wrapper commands qsub, qdel, and qsig which will execute the respective commands on Lotte via ssh. In any case, jobs will be executed on Mach2.

- Lotte's $HOME is not mounted on Mach2. Mach2's $HOME and $SCRATCH are mounted on Lotte. We recommend running jobs in $SCRATCH. For details, please see the diagram above.

- The NVME-based (solid state disk) file system /tmp2 is designed for temporary storage of data that your programs access using fine-grained I/O (e.g. many small files or database-type access patterns). We recommend creating a personal directory with mkdir /tmp2/$USER. Please delete your data from /tmp2 as soon as possible after use.

- Currently there are neither file system quotas nor job resource limits (beyond the ten-day walltime limit) on Mach2 (fair use principle). As a result, unintentional actions (such as creating large amounts of data or starting a program that uses more memory than specified in the job statement) may adversely affect system operation for all users. Please transfer your data to your local machine after use and delete them from Mach2 as soon as possible.

- The PBS default queue is workq, using a CPU frequency of 2100 MHz. If your jobs can take advantage of overclocking (in particular when you are not using all cores of each socket), you may submit your jobs to the queue f2800, which causes the used CPUs to be clocked at 2800 MHz.

- Users are responsible for safeguarding all of their data. There are no file system snapshots or backups. The file systems $HOME and $SCRATCH are guarded against individual media failures by RAID6-type redundancy. The NVMEs making up /tmp2 form a RAID0 array, so the loss of any individual medium results in total loss of /tmp2. Even with these provisions, use the system under the assumption that your data may disappear at any time without prior notice. Use rsync to back up important data to your workstation, and delete data that are no longer needed from MACH2 as soon as possible.

- As on Mach, we are using Environment Modules to manage access to software. As part of an experimental setup, we are trying to supply as much software as possible using the SPACK software deployment system. Spack-managed modules are automatically activated upon logon to Mach2. Use spack list to get an overview of software items installable by Spack.

- We support only the bash shell. Specifically, we urgently ask you to refrain from csh scripting for a number of reasons that are still valid (short summary here). As of the 21st century, bash supports every feature that earlier UNIX shells lacked in comparison to csh, so there is no longer any reason to switch to csh.
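The resource request rules from the list above (whole vnodes of 12 cores, explicit ncpus and mem values) can be sketched as a small bash helper. This is a minimal sketch, not an official JKU or UIBK tool: the function names vnodes_for_cores and select_statement, the example values, and the script name my_job.sh are hypothetical, and it assumes that each chunk of the select statement is placed on one vnode.

```shell
#!/bin/bash
# Sketch: build a PBS select statement that follows the vnode rules above.
# Hypothetical helper names and example values; adapt to your own jobs.

CORES_PER_VNODE=12   # one vnode = one socket = 12 cores on Mach2

# Round a requested core count up to whole vnodes.
vnodes_for_cores() {
    local cores=$1
    echo $(( (cores + CORES_PER_VNODE - 1) / CORES_PER_VNODE ))
}

# Print a select statement that requests whole vnodes.
# $1 = number of cores needed, $2 = memory per vnode in GB.
select_statement() {
    local cores=$1 mem_gb_per_vnode=$2
    local n
    n=$(vnodes_for_cores "$cores")
    echo "select=${n}:ncpus=${CORES_PER_VNODE}:mem=${mem_gb_per_vnode}g"
}

# Example: 30 cores are rounded up to 3 vnodes, with 100 GB per vnode.
select_statement 30 100
```

For 30 cores and 100 GB per vnode this prints select=3:ncpus=12:mem=100g, which stays below the 122 GB of the smaller vnodes. A submission could then look like qsub -q workq -l "$(select_statement 30 100)" my_job.sh, with f2800 in place of workq for the 2800 MHz queue.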

Migrating from Mach to Mach2

The old Mach computer will be decommissioned for UIBK use within the first half of 2018 (tentatively by end of April). This section should help prepare your transition.

- Mach2 is a large shared-memory capability system and should be used only for activities that require this type of resource. If your jobs can be run successfully on conventional cluster systems, please consider switching to one of the LEO systems (Leo3 and Leo3e are currently operative, another cluster Leo4 will soon be added).

- The application process for Mach2 is described above. User accounts existing on Mach will not automatically be created on Mach2.

- Data transfer: there will be no data transfer from Mach to Mach2. Please copy all data that you still need from Mach to your local workstation and delete them from Mach.

- Since there is no data backup and no quota on Mach2, we kindly ask you to only keep your active working set on Mach2. All other data should be migrated to your local system as soon as convenient.

- As is typical for new machines, the software portfolio on Mach2 is currently incomplete. We will install new software based on user demand; if you need a particular piece of software, please open a ticket in the UIBK Ticket System (Queue: HPC) and state your request.

  How fast can we help with software installations:

  - Software that is open source, available under the SPACK deployment system, and can be installed with no errors, can usually be installed very quickly. Use the command spack list [string] to list all software products installable this way (use string to limit search to products matching string). Issue spack info name to get version info on name.

  - All other software (including failed SPACK installations) will be installed using our traditional procedures, which may take a while depending on HPC team workload.

- Due to licensing reasons, not all of the software listed under the JKU Mach2 documentation can be made available to UIBK users.

- Some third party software products are currently not installed on Mach2. Please open a ticket as described above if you need any of these software products specifically on Mach2.

- The transition from Mach to Mach2 needs to be completed in a shorter period of time than usual. With your help, we hope to finish the transition process by the end of April 2018. We apologize for any inconvenience that may arise as a result of this time pressure.
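Copying data to a local workstation, as requested in the list above, could be prepared with a helper like the following. It is only a sketch under assumptions: it is meant to run on the workstation, it assumes that the server can be reached there via SSH, and the function name build_pull_command, the user name c1234567, and the directory names are hypothetical. The helper only prints the rsync command so that it can be reviewed before it is run.

```shell
#!/bin/bash
# Sketch: assemble an rsync command that pulls a directory from a
# remote host to the local workstation. All example values are hypothetical.

# $1 = remote user, $2 = remote host, $3 = remote directory (relative
# paths are resolved against the remote home), $4 = local target directory.
build_pull_command() {
    local user=$1 host=$2 remote_dir=$3 local_dir=$4
    # -a keeps permissions and timestamps, -v lists transferred files.
    # The trailing slash on the source copies the directory contents.
    # Paths containing spaces would need extra quoting.
    printf 'rsync -av %s@%s:%s/ %s/\n' "$user" "$host" "$remote_dir" "$local_dir"
}

# Example: pull the directory "results" from Mach2 into a local backup folder.
build_pull_command c1234567 mach2.jku.at results "$HOME/mach2-backup"
```

Adding the rsync option -n (dry run) to the printed command shows what would be transferred without copying anything, which is a cheap check before deleting data on the server.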